Can AI make music that people want to listen to?

Of course yes, but not yet.

Short updates:

We saw the jazz harpist Brandee Younger with Makaya McCraven at last year’s Chicago Jazz Festival, but missed her free show at the Museum of Contemporary Art back in July because it was the day after Béla Fleck’s Millennium Park concert. She played another free show, this time at the Hyde Park Music Festival. We got to see her for 30+ minutes before we had to go home, and it was great. There’s no one quite like her at the moment.1

10/19-20 is Open House Chicago, a great excuse to explore Chicago neighborhoods. One thing we’re planning to do this year is to visit 2 Ukrainian Catholic churches2 in Ukrainian Village and get lunch at Tryzub Ukrainian Kitchen.

Perhaps unwisely looking beyond the birth of another child, we are low-key planning a trip to visit Julie’s parents in Osaka sometime in the middle of next year. The timing is partly so the younger kid is not walking yet, and partly for Expo 2025, being held near the in-laws’ place.

Sometime last year, I started noticing songs on Spotify that I suspected were made using generative AI. After a few of them, I started keeping a playlist of such songs that I encountered.3

The first one I have in the playlist is “Tinging” by Khukzuk Zendogiu—by the way, I’m writing out the information here so you can open up an incognito window to play it, instead of contaminating your recommendations.

From the music alone, you may or may not feel particularly suspicious. It seems like whoever’s playing can’t keep the beat sometimes, but that could be for effect…or they might just be bad musicians.

But note the strange artist name. Not unpronounceable, but very foreign4. When you Google “Khukzuk”, you only get this artist, and when you Google “Zendogiu”, you only get this artist. So it doesn’t seem like a regular person’s name.

More importantly, all the webpages you see are the pages for songs that are supposedly by this artist, on different platforms (Spotify, last.fm, Soundcloud, etc.). Basically, this is an artist who has no internet presence other than on streaming platforms. No Instagram, no Facebook, certainly not their own website, and no concert info.5

Spotify lists the source of this song as IncazeLabel. Googling doesn’t give me a website for this label, but it turns up this list of songs that got played on a radio show. They played a song called “Infrahumans” by Clats Pesmygz—somehow an even less likely name than Khukzuk Zendogiu—also released by this IncazeLabel. For me, this song really hits the uncanny valley, which I didn’t even know existed for music until recently.

These are songs released by seemingly not-real artists through a seemingly not-real label.

So is this, like, illegal?

Possibly. There’s at least one person who’s been charged with stealing royalties by uploading AI-generated songs and having bots play them.

“Tinging” has more than 360k plays on Spotify, and “Infrahumans” has more than 200k. Everyone knows—or they should know—how little musicians make from 1 play on these platforms, and each of these songs would probably be making measly tens or hundreds of dollars. But who knows how many songs have been posted by whoever is behind this, under how many artists?

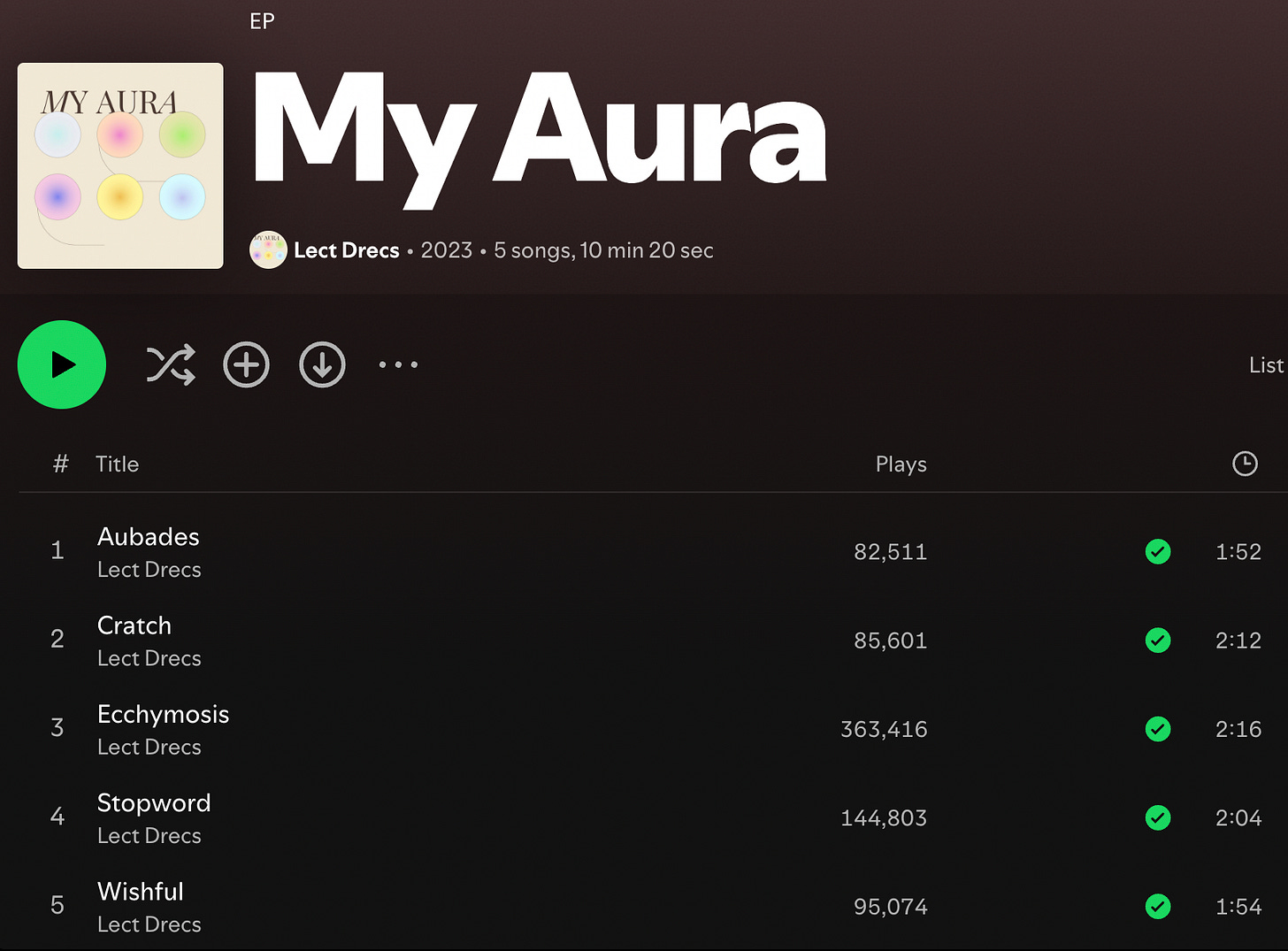

Let me give you one example where I know the artists are fake. Below are 2 EPs of 5 songs each. 2 weird band names and mostly weird song titles.

“Stopword”6 by Lect Drecs and “Hayed” by Fruveegsea have the same lengths, and in fact, they are identical songs. One of these entities is misrepresenting something, and most likely both. This discovery reminded me of this one.

My experience with listening to these songs is that sometimes I do like how they start, then after 10-20 seconds, I start to suspect something is amiss. So I dig into the provenance, and find…no provenance, as you saw above. So I would not be surprised if there’s an AI-generated song, or a few, that snuck into my Spotify library at this point.

If you like ambient-ish music with no lyrics and amorphous beats, and especially if you like streaming playlists that you didn’t create, I would guess that you’ve unwittingly listened to AI-generated music already.

I have no direct proof that the songs above are AI-generated. Circumstantial evidence suggests, though, that the people who uploaded these songs are simply interested in getting as many streams as possible on these platforms at the lowest cost, which suggests that they didn’t hire human musicians—or, God forbid, put in practice so they can make music themselves.

State of AI music in 2024

But just to be safe, what does music that we know to be AI-generated sound like? One caveat for everything that follows: I’ll focus on the technical feasibility of making music using AI, and will ignore all other related issues such as intellectual property, whether it’s good for human musicians, etc.

Here’s a post talking about Suno. It’s one of many services that let people generate music by entering written prompts—think of it as a music version of ChatGPT.

The author posts some songs that he was able to generate on his own—I do recommend listening to some of the songs in the post and songs on the Suno website, and maybe even trying to generate some songs yourself. He then writes, “Now I know what you’re thinking: Yes, these songs aren’t great. But here’s the thing: They’re not terrible either.”

My first comment to that is, these songs are pretty bad. I definitely prefer them to most of the music that I have in my suspected AI music playlist, because there are no terrible technical issues with the music. But there really isn’t much here other than a collection of musical clichés. One reason I want you to try to generate some songs yourself is so you can try getting a song that’s not full of clichés. I can’t say I succeeded.

The author is correct when he points this out:

“But remember how there were complaints that the first AI image generators couldn’t get the number of fingers right? Or when the first deepfakes wouldn’t blink? We don’t hear those complaints anymore because the technology very rapidly improved.”

Just like AI image generators improved quickly, I do think music generating AI will improve over the next few years.

But where does that get us?

The biggest use of AI images right now seems to be as a replacement for stock images. Even if you can’t draw well yourself, you now can make a somewhat realistic picture of some scenario that you have in mind that is much more appropriate than what you can easily find on the internet. And even if you can draw well yourself, it’s much less effort to use an AI if you don’t care that much about things like artistic quality and originality. When you make the analogy to music, this sounds like you’d be using AI to make background music.

Looking over at written text, I’m aware that there are corners of fiction where human authors are using AI to help them write, but ChatGPT is very far from generating a decent novel on its own. At the moment, it’s much better at generating generic text that expresses something with reasonable skill than at generating something with literary quality. Same conclusion as above when you make the analogy to music.

Why this is hard

A machine learning model that generates music has to learn patterns that exist in its training dataset, which is a huge database of existing songs performed by humans. How such a model represents music is an interesting topic that I might get into in the future, but the main point I want to make for now is that the model has an easier time learning common patterns than rare patterns.

The model is likely to have a much larger volume of piano music in its training dataset than, say, harp music. So you’d expect its piano music to be better in many ways than harp music. Similarly, the dataset contains a huge amount of mediocre—or, if that sounds too negative, average—music, compared to the amount of great music. So you’d expect it to generate mediocre music more readily than it generates great music.

Now, it’s totally possible to make these models generate things that are not typical. Speaking extremely broadly7, the question answered by models that generate text or music is, what is the probability of getting a particular item (word, musical note, etc.) at a particular point in the sequence? It’s relatively easy to tell the model to adjust the probabilities so that the output includes things that aren’t common.
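To make "adjust the probabilities" a bit more concrete, here is a minimal sketch of one common way to do it, usually called temperature scaling. The note names and probabilities below are made up for illustration, and real music models work over much larger vocabularies, so treat this as a toy under those assumptions rather than a description of how any particular service works.

```python
import math
import random


def apply_temperature(probs, temperature):
    """Rescale a distribution: temperature > 1 flattens it, < 1 sharpens it.

    Assumes every probability is strictly positive.
    """
    # Raise each probability to the power 1 / temperature, then renormalize.
    scaled = {
        item: math.exp(math.log(p) / temperature) for item, p in probs.items()
    }
    total = sum(scaled.values())
    return {item: value / total for item, value in scaled.items()}


def sample(probs, rng):
    """Draw one item according to its probability."""
    items = list(probs)
    weights = [probs[item] for item in items]
    return rng.choices(items, weights=weights, k=1)[0]


# Hypothetical "what note comes next?" distribution, invented for this example.
next_note = {"C": 0.70, "E": 0.20, "G": 0.08, "F#": 0.02}

flat = apply_temperature(next_note, 2.0)   # rare notes become more likely
sharp = apply_temperature(next_note, 0.5)  # the common note dominates even more

rng = random.Random(0)
melody = [sample(flat, rng) for _ in range(8)]
print(melody)
```

With the flattened distribution, the unusual F# shows up more often than it would otherwise. Nothing in this step knows whether an F# is a good idea, though.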

The real problem here is not that you can’t make AI create atypical things, but that being atypical almost always takes you away from being great. If you have an orchestra of people who haven’t played their instrument before, you get very atypical music that is also hilariously bad. Great music is not only atypical, but atypical in all the right ways.

So you need a way to weight the probabilities so that whatever makes better music becomes more likely to be generated. One way to do this would be to use some sort of objective metric, like the number of streams on Spotify or YouTube, and bias the probabilities toward things that appear in songs with higher streaming counts. This should get the AI to make music that approximates popular songs better in some way. Another way would be to include human judgement in the process. We know that large language models are trained with human input. Companies that make these models are pretty cagey about what they do exactly, but I’m sure they’ve already been trying something along these lines.
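As a rough illustration of biasing the probabilities toward "better" choices, the sketch below multiplies each option's probability by a factor that grows with a quality score, then renormalizes. Everything here is an assumption for the sake of the example: the chord labels, the scores (which could stand in for streaming counts or human ratings), and the `strength` knob are all hypothetical, and this is not a claim about what any company actually does.

```python
import math


def reweight(probs, scores, strength=1.0):
    """Tilt a distribution toward items with higher scores.

    Each probability is multiplied by exp(strength * score) and the result
    is renormalized. Items without a score are treated as score 0.
    """
    tilted = {
        item: p * math.exp(strength * scores.get(item, 0.0))
        for item, p in probs.items()
    }
    total = sum(tilted.values())
    return {item: value / total for item, value in tilted.items()}


# Hypothetical "what chord comes next?" distribution learned from typical songs.
next_chord = {"I": 0.50, "V": 0.30, "bVI": 0.15, "bII": 0.05}

# Hypothetical quality scores, e.g. derived from streams or listener ratings.
scores = {"I": 0.0, "V": 0.1, "bVI": 1.2, "bII": -1.0}

biased = reweight(next_chord, scores, strength=1.0)
for chord, p in biased.items():
    print(f"{chord}: {next_chord[chord]:.2f} -> {p:.2f}")
```

The difference from simply flattening the distribution is that only some unusual choices get a boost (here, bVI), while others get pushed down further (here, bII). The hard part, of course, is getting scores that actually track what makes music great.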

Are methods like these enough to give us great AI music, or even just an annoying yet catchy hit song? Maybe. Or maybe we need something drastically different to get there.8 But nothing in the problem suggests to me that it’s impossible to get a computer algorithm to generate great music.

So, as in many things about the future, I’m pessimistic in the short term and optimistic in the long term for pure AI music. Pretty soon, though, we’ll start seeing more “centaurs”—humans and AI working together to create music. What exactly will come out from that, I won’t even try to predict here.

What I’m listening to now

For your palate (eardrum?) cleanser, here’s some extremely not-AI music.

KOKOKO! is an experimental electropop band from Kinshasa, the capital of the Democratic Republic of the Congo. Until recently, the only Congolese bands I was familiar with were Franco & le T.P OK Jazz, who played African rumba decades ago, and Jupiter & Okwess, who I’ve actually seen live9 but are based in Europe. But Kinshasa is a mega city with lots of music.

KOKOKO! starts with Congolese melodies and rhythms, which are already great. Then the electronics add so much frenetic energy, as do the vocals that are, for lack of a better term, shouty. My favorite track is “Bazo Banga”.

1. In the past, there have been 2 prominent jazz harpists in Dorothy Ashby and Alice Coltrane, who was an accomplished jazz pianist before she started playing the harp. Here’s an article about the Lyon & Healy harp factory at Lake and Ogden in Chicago that includes the story of how Alice Coltrane got her harp.

2. St. Nicholas’s has a webpage on its history, which includes an explanation for why there are 2 Ukrainian Catholic churches in very close proximity. TL;DR, one church uses the Gregorian calendar and the other uses the Julian.

3. On a separate note, there used to be a lot more “spam” songs that would show up on my Release Radar playlist, where a totally unknown artist would add a famous artist (or ten) as being on a song, presumably to have the song show up as the big artists’ latest release and get played by a bunch of people. Spotify seems to have cleaned this up.

4. I, Satoru Inoue, live in a glass house on this particular hill. At least Satoru and Inoue are both common Japanese names; Inoue is the 16th most common family name in Japan. There was even a decent sprinter named Satoru Inoue that I watched growing up.

5. I guess this post might start showing up.

6. The title “Stopword” is really on the nose if you know what it means.

7. Some music AI models are transformers, which are the “T” in GPT, and other related models for sequential data. Others are diffusion models, which have been very successful in image generation. I don’t think I can be more specific than what I wrote in this sentence without being wrong about one of these types of models.

8. This fascinating experiment in getting ChatGPT to make art does make me think we might need something drastically different.

9. We went to see Noura Mint Seymali, but were blown away by them instead. The most fun I’ve had at a Millennium Park concert.

FWIW, I did basically as well as random in this AI art Turing test (https://www.astralcodexten.com/p/ai-art-turing-test). My excuse is that I was doing it in a rush (before I had to wake up the kid to get ready for daycare). There are definitely ways to do this much better than random, which some people have shared in the comments. At the same time, the fact that it requires some specific knowledge to distinguish between human and AI art suggests that it's already passing the Turing test.

And I just came across this one ("Treatment" by Jo Patience...supposedly). It might be more convincing than anything I've heard from Suno.

https://www.youtube.com/watch?v=uzm8NuzrQtw